Learning record of Dilithium

Dilithium

| Paper | Summary | Notes after reading |
|---|---|---|
| https://pq-crystals.org/dilithium/data/dilithium-specification-round3-20210208.pdf, [BDK+] | Round 3 specification document submitted to NIST. | |
| [DKL+18] | Similar to the specification above. | |
| MUTS22 | Attack on the case where \(y_{i, j}\) is zero. | |
| [RJH+18] | Knowing only \(s_1\) is enough to sign. | |
| Lyubashevsky | Fiat-Shamir with Aborts framework | |
| DDLL13 | BLISS signature scheme | |
| https://eprint.iacr.org/2023/1891 | CPA-PoI on NTT | |

MUTS22

MUTS22 recovers \(s_1\) using a Multi-Layer Perceptron (MLP) machine-learning classifier, and according to [RJH+18] that knowledge alone is enough to sign. The leak is in the bit-unpacking function used when generating the vector \(y\): the seed \(\rho'\) is fed into an XOF to produce a byte string, which is then unpacked into \(\ell\) polynomials with \(N\) coefficients each. The function used in [BDK+]:

`void polyz_unpack(poly *r, const uint8_t *a) {`
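To make the leak point concrete, here is a rough Python model of what the unpacking step does. It is not the reference C code: the names `pack_z` and `unpack_z` are made up for this note, and it assumes the Dilithium2 parameter \(\gamma_1 = 2^{17}\) with 18-bit little-endian fields. The point to notice is the final subtraction `GAMMA1 - t`, whose result is zero exactly when the raw field equals `GAMMA1`.

```python
GAMMA1 = 1 << 17   # assumed: Dilithium2 value of gamma_1
BITS = 18          # assumed: each packed field stores GAMMA1 - coeff in 18 bits


def pack_z(coeffs):
    """Pack coefficients in (-GAMMA1, GAMMA1] as consecutive 18-bit fields."""
    acc = 0
    for k, c in enumerate(coeffs):
        t = GAMMA1 - c                      # shift into the range [0, 2^18)
        assert 0 <= t < (1 << BITS), "coefficient out of range"
        acc |= t << (BITS * k)
    return acc.to_bytes((BITS * len(coeffs) + 7) // 8, "little")


def unpack_z(data, n):
    """Inverse of pack_z: read n 18-bit fields and map them back."""
    acc = int.from_bytes(data, "little")
    mask = (1 << BITS) - 1
    out = []
    for k in range(n):
        t = (acc >> (BITS * k)) & mask      # raw 18-bit field
        out.append(GAMMA1 - t)              # zero exactly when t == GAMMA1
    return out


if __name__ == "__main__":
    sample = [0, 1, -1, GAMMA1]
    print(unpack_z(pack_z(sample), len(sample)))
```

Four coefficients occupy 9 bytes in this layout, which is why the model treats the input as one long little-endian bit string instead of indexing bytes by hand.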

The attack has two phases:

- Profiling phase: run the signing process with random input messages on Device A and record the power consumption while the bit-unpacking function executes. The classifiers are then trained on these traces, labelled with the sensitive internal data.

- Attack phase: observe the power traces of signature generation on Device B and predict the sensitive internal data. The Least Squares Method (LSM) then yields a candidate solution, and the secret key \(s_1\) is recovered by solving an Integer Linear Program (ILP).
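The LSM and ILP steps of the attack phase can be written down roughly as follows. This is a sketch, not the exact formulation in MUTS22: the matrix \(C\), the vector \(b\) and the indicator variables \(x_m\) are notation introduced here. Each coefficient that the classifier labels as zero contributes one linear equation in one polynomial \(s\) of \(s_1\), so \(M\) such predictions give

\[
C s \approx b, \qquad C \in \{-1, 0, 1\}^{M \times N}, \quad b \in \mathbb{Z}^{M}.
\]

The relation is only approximate because misclassified coefficients produce wrong rows. Assuming \(C\) has full column rank, least squares gives the candidate

\[
\hat{s} = \arg\min_{s \in \mathbb{R}^{N}} \lVert C s - b \rVert_2^2 = (C^{\top} C)^{-1} C^{\top} b,
\]

which is rounded to integers. One way to phrase the ILP is then to look for an integer vector with coefficients bounded by \(\eta\) that satisfies as many rows as possible:

\[
\max_{s,\, x} \sum_{m=1}^{M} x_m
\quad \text{subject to} \quad
x_m = 1 \Rightarrow \langle C_m, s \rangle = b_m, \qquad
s \in \{-\eta, \dots, \eta\}^{N}, \quad x_m \in \{0, 1\}.
\]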

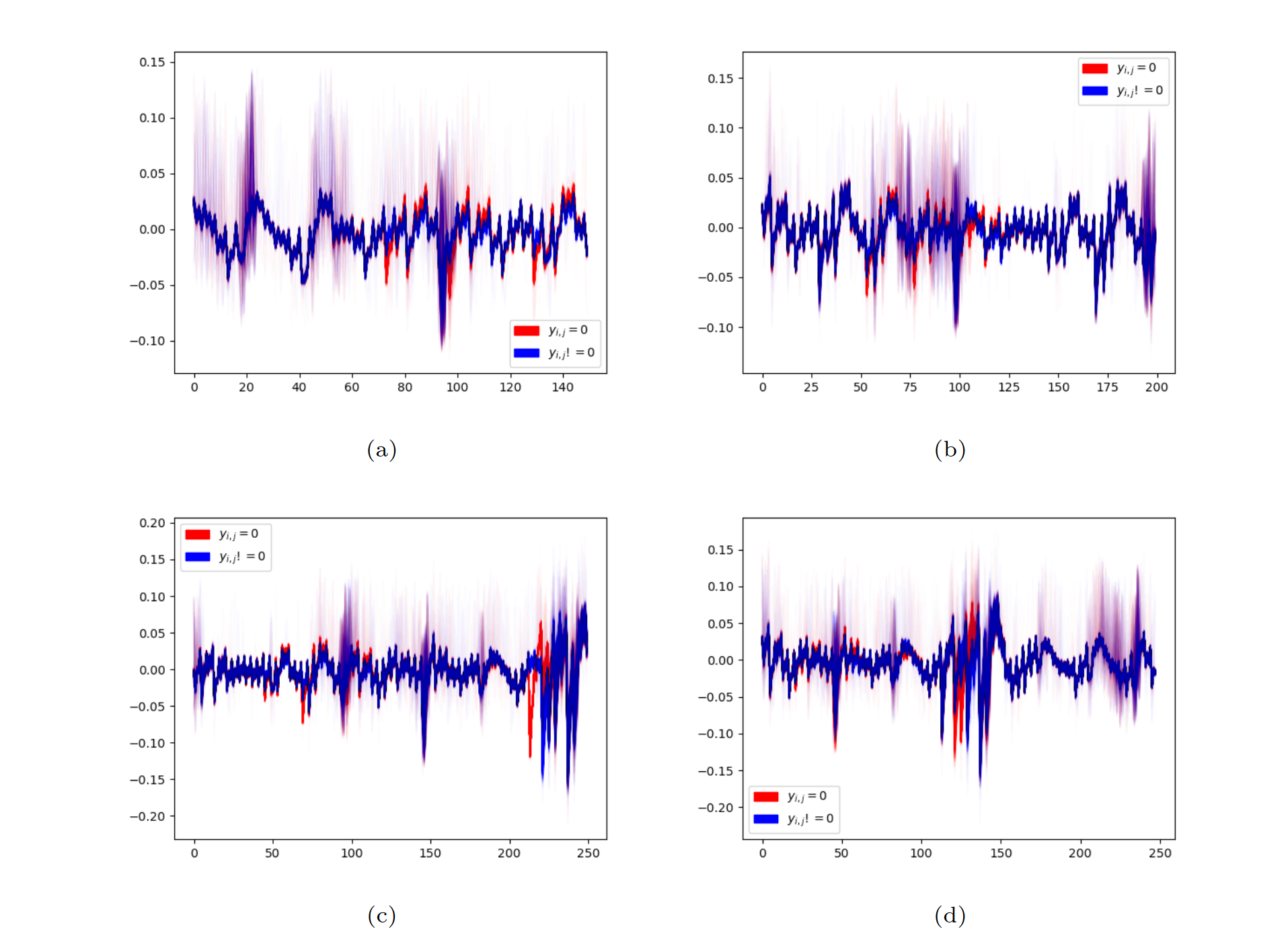

By eye, the traces for a zero and a non-zero unpacking result cannot be told apart, so the authors train machine-learning classifiers.

(I indeed can't distinguish them.)

When \(y_{i, j}\) is zero, \(s_1\) can be recovered: since \(z = y + c s_1\), a zero coefficient gives \(z_{i, j} = (c s_1)_{i, j}\), which is a linear equation in the secret. So the authors use ML to decide whether a coefficient is zero or non-zero. Once that is done, the rest is easy.
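A toy experiment makes it easier to see why known zero coefficients are enough. The sketch below is my own illustration, not the MUTS22 code: it uses a tiny ring \(\mathbb{Z}[x]/(x^8 + 1)\), made-up parameters (`ETA`, `TAU`, `Y_BOUND`), no rejection sampling, and a perfect "classifier" that simply checks `y[j] == 0`. With no misclassifications, exact Gaussian elimination replaces the LSM and ILP steps.

```python
import random
from fractions import Fraction

N = 8        # toy ring degree (Dilithium uses 256)
ETA = 2      # toy bound on secret coefficients
TAU = 3      # toy number of +-1 entries in the challenge
Y_BOUND = 4  # toy range for y, small so that zeros occur often


def negacyclic_row(c, j):
    """Row j of the multiplication-by-c matrix in Z[x]/(x^N + 1)."""
    return [c[j - k] if k <= j else -c[j - k + N] for k in range(N)]


def sample_challenge(rng):
    c = [0] * N
    for pos in rng.sample(range(N), TAU):
        c[pos] = rng.choice((-1, 1))
    return c


def solve_exact(rows, rhs):
    """Gauss-Jordan over the rationals; returns None while rank < N."""
    m = [[Fraction(v) for v in r] + [Fraction(b)] for r, b in zip(rows, rhs)]
    for col in range(N):
        piv = next((i for i in range(col, len(m)) if m[i][col] != 0), None)
        if piv is None:
            return None
        m[col], m[piv] = m[piv], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for i in range(len(m)):
            if i != col and m[i][col] != 0:
                f = m[i][col]
                m[i] = [a - f * b for a, b in zip(m[i], m[col])]
    return [int(m[k][N]) for k in range(N)]


def recover(seed=1):
    """Collect equations z_j = (c*s)_j from zero y_j until s is determined."""
    rng = random.Random(seed)
    s = [rng.randint(-ETA, ETA) for _ in range(N)]
    rows, rhs = [], []
    while True:
        c = sample_challenge(rng)
        y = [rng.randint(-Y_BOUND, Y_BOUND) for _ in range(N)]
        for j in range(N):
            row = negacyclic_row(c, j)
            z_j = y[j] + sum(a * b for a, b in zip(row, s))
            if y[j] == 0:  # stands in for the side-channel classifier
                rows.append(row)
                rhs.append(z_j)
        guess = solve_exact(rows, rhs)
        if guess is not None:
            return s, guess


if __name__ == "__main__":
    secret, guess = recover()
    print("secret:", secret)
    print("guess: ", guess)
```

In the real attack some predicted zeros are wrong, which is where a noise-tolerant solver is needed instead of exact elimination.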